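We welcome applications from prospective graduate students (2024 intake), postdocs and full-time research staff. For openings for students or postdocs, please contact [email protected]

We are always recruiting undergraduates in their second year or above who have a genuine interest in exploring how neural networks work.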

PMI's Landmark

To get to know us, please read these papers.

1. Entropy landscape of solutions in the binary perceptron problem (2013)

2. Origin of the computational hardness for learning with binary synapses (2014)

3. Statistical mechanics of unsupervised feature learning in a restricted Boltzmann machine with binary synapses (2017)

4. Minimal model of permutation symmetry in unsupervised learning (2019)

5. Weakly correlated synapses promote dimension reduction in deep neural networks (2021)

6. Associative memory model with arbitrary Hebbian length (2021)

7. Eigenvalue spectrum of neural networks with arbitrary Hebbian length (2021)

8. Spectrum of non-Hermitian deep-Hebbian neural networks (2022)

9. Statistical mechanics of continual learning: variational principle and mean-field potential (2022)

Our goal

The research group focuses on the theoretical foundations of various kinds of neural computation, including associative neural networks, restricted Boltzmann machines, recurrent neural networks, and their deep variants. We are also interested in developing theory-grounded algorithms for real-world applications and in relating our theoretical studies to neural mechanisms. Our long-term goal is to uncover the basic principles of machine and brain intelligence using physics-based approximations. The plenary talk on the scientific questions of machine learning at the 6th CACSPCS is available here. A complete story of the mechanism of unsupervised learning is available here. My CV is HERE.

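- The 2nd New-Year Forum of interdisciplinary studies of neural networks will be held online on December 31, 2022. Students are welcome to apply to give talks; see the conference website.

- Promoted to Full Professor on Apr 27, 2022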

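- Fall 2022 online course "Statistical Mechanics of Neural Networks" (2022-09 to 2023-06): 62 enrolled students, with backgrounds spanning mathematics, physics, computer science, psychology, cognitive science and more. Course features: learning by applying, exploring the research frontier, and exchanging ideas (creative learning). The second season is recruiting new students until February 8; please send your CV to [email protected]

- The lab's popular-science WeChat official account "PMI Lab" is now live.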

- The first New-Year Forum of interdisciplinary studies of neural networks will be held on Jan 3, 2022.

- We are organizing a regular (e.g. monthly) online seminar, "INTheory", focusing on the exchange of ideas about the interplay between physics, machine learning and brain sciences. If you are interested in giving us a talk, please contact me!

- My book Statistical Mechanics of Neural Networks (SMNN) has been published; see the Kindle eBook and the hardcover on Amazon. The hardcover is published by Springer (overseas edition) and by Higher Education Press (mainland China edition, available on JD.com; see also the Higher Education Press post). The eBook is on SpringerLink.

- A review of statistical physics and neural networks has just been published in 《科学》 (Kexue/Science, Shanghai Scientific & Technical Publishers, founded in 1915), 2022, Vol. 74, No. 01, p. 40. The pdf is HERE, and an online version of the text is HERE. Viewpoints: On the promotion of interdisciplinary studies.

News

- We propose a statistical mechanics theory of continual learning, see the link.

- We developed a random matrix theory of sequence learning and replay in this recent work. To appear in Phys Rev Research!

- We propose mode decomposition learning, a faster and more transparent deep-learning framework. See the preprint Here.

- We provide an ensemble perspective on temporal credit assignment; details are HERE. To appear in Phys Rev E!

- Invited speaker at CPS2022 (Nov 17-20, SUSTECH).

- Invited speaker at Autumn School on "Machine Learning and Statistical Physics" (Yunnan University, Oct 14~27, 2022).

- Invited talk at China Society for Industrial and Applied Mathematics (Sept 22-25, 2022, Guangzhou).

- Invited talk at Collective Dynamics and Networks (June 10-12, 2022, DUKE KUNSHAN)

- We recently proved the equivalence between belief propagation instability and transition to replica symmetry breaking in perceptron learning systems; the paper was just accepted by Phys Rev Research, arXiv version HERE.

- I was recently invited to speak at the virtual seminar series in computational and systems neuroscience at Research Centre Jülich, Germany (June 1st, 2022).

- I was recently invited to give a talk in the statistical physics session of the 15th Asia Pacific Physics Conference (Gyeongju, South Korea, August 21-26, 2022).

- An associative memory model of arbitrary Hebbian length is proposed HERE, establishing the connection between Hebbian learning, unlearning (or dreaming), and the temporal-to-spatial correlation conversion in the brain. The eigenvalue spectrum of the neural couplings is also provided HERE. Both papers will be published side by side in Phys Rev E!

- Invited to serve on the Program Committee of MSML22 (International Conference on Mathematical and Scientific Machine Learning) (Nov 2021)

- Our recent work "data-driven effective model shows a liquid-like deep learning" was just accepted by Phys Rev Research.

- I was awarded the Excellent Young Scientists Fund by the NSFC of China (August, 2021).

- I was invited to give a Plenary Talk at the 6th Chinese annual conference on statistical physics and complex systems (July 2021, Jilin University)

- Chan Li won the 3-min talk competition organized by IOP press.

- I was invited to give an online seminar at the Institute of Statistical Mathematics in Japan (Jan 2021).

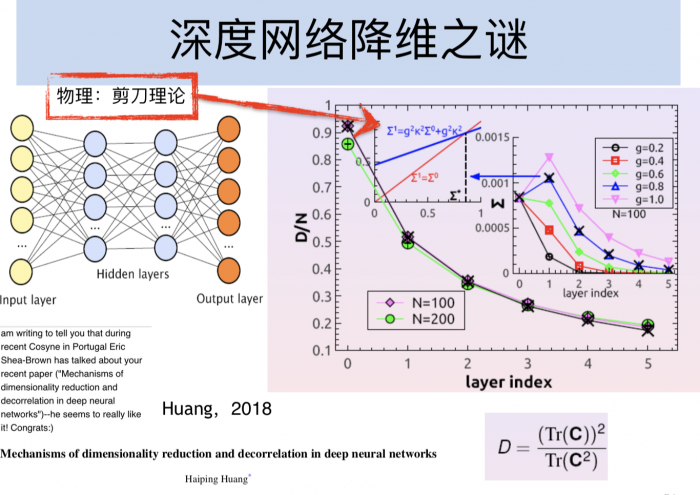

- Our recent theory shows that weakly correlated synapses can not only accelerate dimension reduction along the deep hierarchy but also slow down the neural decorrelation process; the scaling of synaptic correlation can be uniquely determined by our theory. See details HERE. Published in Phys Rev E (open access).

- We recently proposed a generalized backpropagation to learn the credit assignment underlying decision making in deep neural networks, from a network-ensemble perspective. See the arXiv for details. Video of this work. This paper will appear in Phys Rev Lett. Coverage: 京师物理, InfoMat research-highlight post.

- We recently solved the challenging problem of training an RBM with discrete synapses, with a single equation unifying three essential elements of learning: sensory inputs, synapses and neural activity. See the arXiv for details. The paper will appear in Phys Rev E as a Rapid Communication. Code is available HERE.

- Our new results on the statistical physics of unsupervised learning with prior knowledge in neural networks have been submitted to arXiv. The work demonstrates the computational role of priors in learning by an analytic argument: compared with the prior-free scenario, priors significantly reduce the data size needed to trigger concept formation, and they merge, rather than separate, the permutation symmetry breaking phases. This work will appear in Phys Rev Lett. A video introducing the basics of this work is HERE. Coverage: NO, Chinese news report, InfoMat research-highlight post, 京师物理 popular-science WeChat post.

- From Oct 4 to 6, I am organizing an international workshop, "statistical physics and neural computation" (SPNC-2019), at the School of Physics, Sun Yat-sen University; the website is ready. If you want to attend the workshop, please write to me. The website information is being updated continuously. Videos of all lectures are HERE.

- I was invited to give a talk at the annual conference of the Chinese complex systems society (Shanghai, July 12-14, 2019).

- I was invited to give a talk "minimal data size to trigger concept formation in neural networks: from mean-field theory to algorithm", at the 5th conference on condensed matter physics (statistical physics section), June 27-30, 2019.

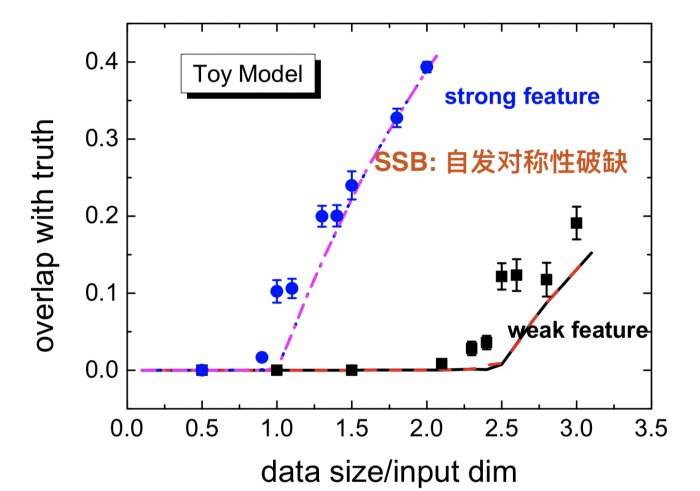

- We will announce our new theoretical study of unsupervised learning soon (surprising results about the transition data size)! See our recent preprint "Minimal model of permutation symmetry in unsupervised learning". We analytically prove that the learning threshold that triggers spontaneous symmetry breaking (concept formation) does not depend on the number of hidden neurons (two in this minimal model) as long as this number is finite. Moreover, our analytical result reveals that weak correlation among the receptive fields of hidden neurons significantly reduces the learning threshold, which is consistent with the non-redundant weight assumption popular in systems neuroscience and machine learning!

- Haijun Zhou will visit us and give a seminar on his recent work about active online learning on Jan 14, 2019.

- I was invited to contribute to a special issue, "statistical physics and machine learning", in J. Phys. A.

- I will give an invited talk at the international conference in Beijing, Nov 2-4.

- Our theoretical work on statistical properties of deep networks under weight removal perturbations is updated on arXiv: 1705.00850. The new version clarifies a first-order phase transition separating a paramagnetic phase from a spin glass phase, which may connect to robustness of the deep networks and performance of the dropconnect algorithm when different dropconnect probabilities are used. To be published by Phys Rev E (2018)

- A theory about deep computation was just posted on arXiv: 1710.01467. To be published in Phys Rev E (2018).
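For readers new to the associative-memory item in the News above (arbitrary Hebbian length), here is a minimal Python sketch of the classic Hopfield-type associative memory that uses the standard one-step Hebbian rule, J_ij = (1/N) Σ_μ ξ_i^μ ξ_j^μ. It assumes ±1 neurons, random patterns and zero-temperature asynchronous dynamics. It is only the textbook baseline, not our arbitrary-Hebbian-length model, and the function names are hypothetical.

```python
import random


def hebbian_couplings(patterns):
    """Standard Hebbian rule: J_ij = (1/N) * sum_mu xi_i^mu * xi_j^mu, with J_ii = 0."""
    n = len(patterns[0])
    J = [[0.0] * n for _ in range(n)]
    for xi in patterns:
        for i in range(n):
            for j in range(n):
                if i != j:
                    J[i][j] += xi[i] * xi[j] / n
    return J


def recall(J, state, sweeps=10):
    """Zero-temperature asynchronous dynamics: s_i <- sign(sum_j J_ij s_j)."""
    s = list(state)
    n = len(s)
    for _ in range(sweeps):
        for i in range(n):
            h = sum(J[i][j] * s[j] for j in range(n))
            s[i] = 1 if h >= 0 else -1  # ties broken towards +1
    return s


def overlap(a, b):
    """Normalized overlap m = (1/N) * sum_i a_i * b_i; equals 1 for identical states."""
    return sum(x * y for x, y in zip(a, b)) / len(a)


rng = random.Random(0)
n, p = 200, 5  # storage load alpha = p / n = 0.025
patterns = [[rng.choice((-1, 1)) for _ in range(n)] for _ in range(p)]
J = hebbian_couplings(patterns)

# Flip about 20% of the bits of pattern 0, then let the dynamics clean it up.
noisy = [-x if rng.random() < 0.2 else x for x in patterns[0]]
print("overlap before:", overlap(noisy, patterns[0]))
print("overlap after: ", overlap(recall(J, noisy), patterns[0]))
```

The load in this example (5 patterns in 200 neurons) is far below the classic Hopfield capacity of roughly 0.138N. At that load, a corrupted pattern should typically be restored to an overlap close to 1.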

Referee Services

Physical Review Letters (acrv 20; rjrv 2), Nature Communications (1), Phys Rev X (3), eLife (1), Physical Review E (18), Phys Rev B (2), Phys Rev Research (1), PLoS Comput Biol (2), Communications in Theoretical Physics (4), Journal of Physics: Conference Series (2), Journal of Statistical Mechanics: Theory and Experiment (8), Journal of Physics A: Mathematical and Theoretical (7), J. Stat. Phys (1), Eur. Phys. J. B (1), Neural Networks (2), Scientific Reports (2), Network Neuroscience (1), Frontiers in Computational Neuroscience (1), Neurocomputing (1), MLST (1), Physica A (3), IEEE Transactions on Neural Networks and Learning Systems (2), Chin. Phys. Lett (1), Chin. Phys. B (2), Chaos (1)

Program Committee of international machine learning conferences

Mathematical and Scientific Machine Learning (MSML2022)

Referee Services for PhD Theses

---Sydney University (2022)

Referee Services for Grant Proposals

Honors and awards

- Aug 2021 Excellent Young Scientists Fund, NSFC of China

- Nov 2020 Fulan Research Incentive Award, School of Physics, SYSU

- Mar 2017 8th RIKEN Research Incentive Award, RIKEN

- Jan 2012 JSPS Postdoctoral Fellowship for Foreign Researchers, Japan Society for the Promotion of Science (JSPS)

Grants

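- Sun Yat-sen University Hundred Talents Program start-up funding for young academic leaders (2018-2019)

- Sun Yat-sen University special funding for high-level international conferences: International Workshop on Statistical Physics and Neural Computation (SPNC-2019) (2019)

- NSFC Young Scientists Fund: Statistical physics of unsupervised learning in neural networks (2019-2021)

- NSFC Excellent Young Scientists Fund: Statistical physics of neural networks (2022-2024)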

Teaching

- General Physics, for mathematics and applied mathematics undergraduate students (2018 Fall)

- Thermodynamics and Statistical Physics, for physics undergraduate students (2018-2021 Fall)

- College Physics, for computer science, mathematics, psychology undergraduate students (2019-2021 Spring)

- Nonlinear physics and complex systems, for physics undergraduate students (2022 Spring)

- Statistical Mechanics of Neural Networks (planned for Fall 2023; offered as an online course in Fall 2022)

Our previous representative achievements

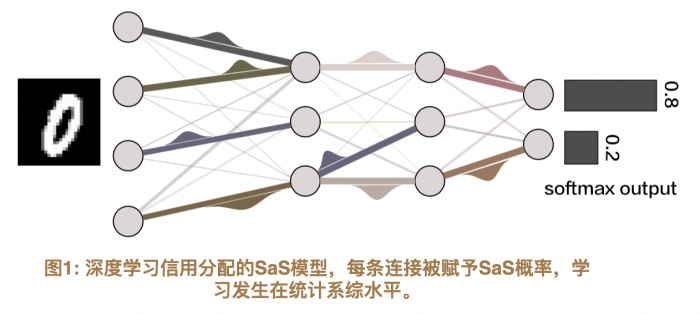

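A brief introduction to our research group: in 2014, my collaborators and I gave a theoretical account of the physical origin of the computational hardness of perceptron learning (cited multiple times by the 2016 Onsager Prize winner). In 2015 we pioneered the study of the statistical mechanics of restricted Boltzmann machines, and between 2016 and 2017 we proposed the simplest physical model of unsupervised learning, which attracted wide attention from our peers. In 2016 we also proposed a phase-transition theory of retinal neural coding, which was confirmed from a different angle by an experimental group at Princeton University. From 2017 to 2018, we built physical models of dimension reduction and decorrelation in deep neural networks and explained their mechanisms (recommended in a keynote talk at Cosyne 19). In 2019, we proved theoretically that in unsupervised learning with a finite number of hidden neurons, the data size at which spontaneous symmetry breaking occurs does not depend on the number of hidden neurons, and that (weak) correlations among the receptive fields of the hidden neurons significantly reduce the data size of this transition (concept formation), by more than 50%! More importantly, the theory predicts that unsupervised learning is essentially a series of symmetry breakings driven by the data stream (spontaneous symmetry breaking and two-fold permutation symmetry breaking); this theory was published in Physical Review Letters. In the same year, we proposed a training algorithm for restricted Boltzmann machines with discrete weights and cast the three essential elements of unsupervised learning (sensory inputs, synapses and neural states) into a single physical equation (published as a Rapid Communication in Physical Review E). In 2020 we also proposed a physical model of credit assignment in deep learning (published in Physical Review Letters). Our group has long studied the theoretical foundations of neural computation from the perspective of statistical physics.

You can download these papers from arXiv (links to the published versions are also given there). Code is HERE (under construction).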

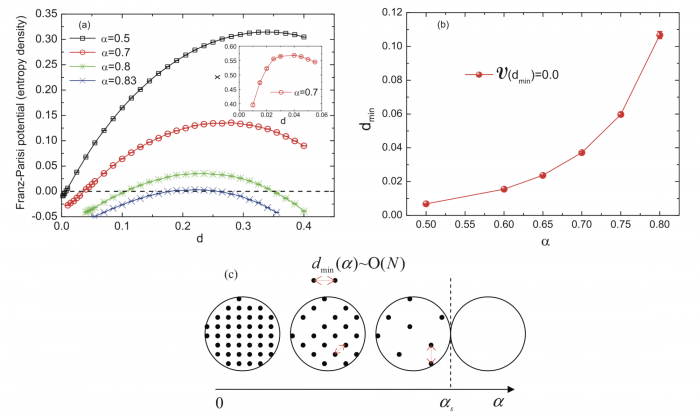

a. Origin of the computational hardness of the binary perceptron model (2014)

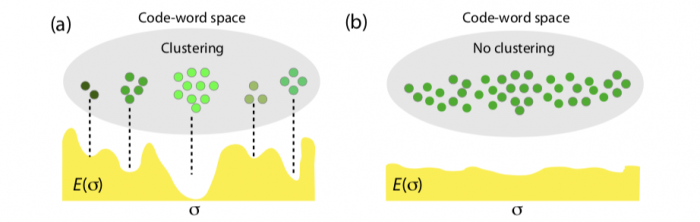

b. A first-order phase transition reveals clustering of neural codewords in retina (2016)

c. Unsupervised feature learning has a second-order phase transition at a critical data size (2016, 2017)

d. Theory of dimension reduction in deep neural networks (2017, 2018)

e. Minimal model of permutation symmetry in unsupervised learning (2019): weakly correlated receptive fields significantly reduce the data size that triggers concept formation (the spontaneous symmetry breaking predicted in our previous works (2016, 2017)); unsupervised learning in neural networks is explained as the breaking of a series of symmetries driven by data streams (observations)! In a new preprint, arXiv: 1911.02344, we further demonstrate the computational role of prior knowledge in unsupervised learning, showing that the prior further reduces the minimal data size and reshapes the inherent-symmetry-breaking transitions.

f. A variational principle for unsupervised learning interpreting three elements of learning (2019, arXiv:1911.07662):

Learning in neural networks involves data, synapses and neurons, so understanding how these three elements interact is important. Previous studies were limited to very simple networks with only a few hidden neurons because of computational obstacles. Here, we propose a variational principle that goes beyond this limitation and can treat arbitrarily many hidden neurons. The theory further interprets the interaction among data, synapses and neurons as an expectation-maximization process, thereby opening a new path towards understanding the black-box mechanisms of learning in generic architectures.

g. A statistical ensemble model of deep learning (PRL 2020)

Research for what?

“Why should we study this problem, if not because we have fun solving it?”---Nicola Cabibbo (known for the Cabibbo angle; Giorgio Parisi was one of his students)

“If you don't work on important problems, it's not likely that you will do important work.”---Richard Hamming

Supervision of Bachelor Thesis

2019: 4 students; among them, Mr Quan scored 91

2020: 6 students; among them, Ms Li scored 95 and graduated with honors

2021: 4 students; among them, Mr Chen scored 95 (best thesis of SYSU)

2022: 3 students; among them, Mr Zou scored 91.6

Supervision of Master Thesis

2021: Jianwen Zhou, applications of statistical physics to neural dimensionality reduction and associative memory of arbitrary Hebbian length

Prizes won by students

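2022, Samsung Scholarship, Wenxuan Zou

2021, National Scholarship and C. N. Yang Prize, Chan Li

2021, Fulan Outstanding Graduate Student Scholarship, Zijian Jiang

2021, Best Poster, 6th Chinese Annual Conference on Statistical Physics and Complex Systems, Chan Li

2021, Best Poster (Second Prize), 3rd Chinese Conference on Computational and Cognitive Neuroscience, Wenxuan Zou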

2020, Master Student Entrance Prize, Chan Li

2020, Fulan Master Student Prize, Wenxuan Zou

2020, Three-min Talk Competition Prize (Organized by IOP press), Chan Li, Talk Title: Learning Credit Assignment

2020, Best Poster Prize, Annual Physics Conference in Guangdong Province, Chan Li

Former Members

2018-2020, Dongjie Zhou (Chinese Academy of Sciences, Shanghai, PhD)

2018-2020, Zhenye Huang (Chinese Academy of Sciences, Beijing, PhD)

2018-2020, Nancheng Zheng (Company, Guangzhou)

2018-2020, Tianqi Hou (Now at HUAWEI theory lab Hong Kong)

2019-2021, Zimin Chen (Tsinghua University, PhD)

2018-2021, Jianwen Zhou (ITP, CAS, Beijing, PhD)

2020-2022, Yang Zhao

If you want to become a member of PMI, please consider the following two questions:

1. Are you really interested in the theory of neural networks?

2. Are you confident in (the potential of) your math and coding abilities?